Fabrice NEYRET - Maverick team, LJK, at INRIA-Montbonnot

Nowadays, 3D applications such as games and movie production rely on highly detailed objects set in large scenes. To avoid the costly rendering of distant objects whose many details fall into the same pixels, as well as to avoid aliasing artifacts, distant objects are simplified into « levels of detail » meshes. But micro-details (e.g., a shining ice crystal) can influence the whole pixel value, and a rough or corrugated geometry still reflects light differently even when its shape seems flat. Similarly, a distant grid looks like a semi-transparent panel: even if the bars are smaller than a pixel, they cannot simply be erased. This illustrates that sub-pixel information can induce emergent effects, which should be explicitly expressed in a shader function rather than being totally suppressed.

Our team has published a number of research works on appearance filtering. Some aimed at deep filtering of well-characterized objects (such as E. Bruneton's work on ocean waves or trees), and some aimed at less deep filtering of more general surfaces (E. Heitz's work).

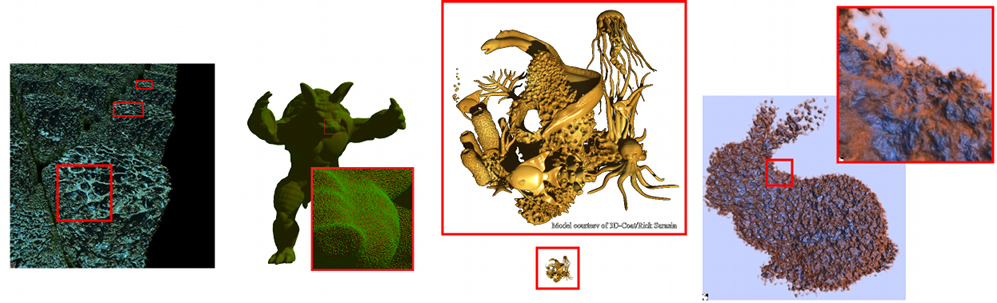

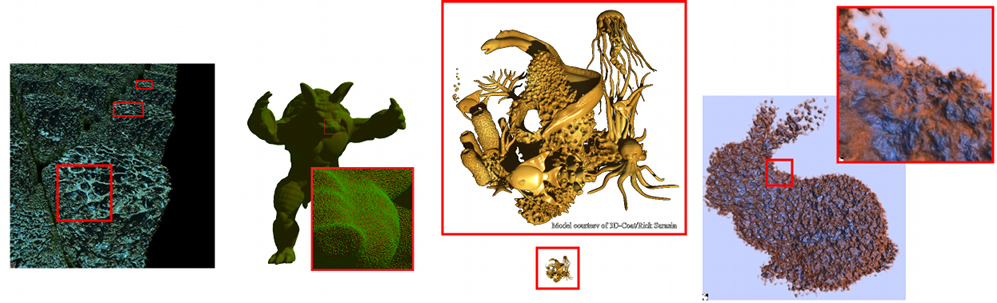

Our team also developed the voxel-based scalable scene representation GigaVoxels, based on the fact that appearance filtering is well-posed using voxels, contrary to meshes. Still, averaging sub-voxel data (e.g., density) is not sufficient to obtain the averaged appearance of a voxel.
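To make the last point concrete, here is a minimal sketch, not taken from GigaVoxels, comparing two ways of computing the opacity of a coarse voxel seen along its axis. It assumes a simple Beer-Lambert absorption model and a voxel split into side-by-side sub-columns; the function names and the density values are hypothetical and chosen only for illustration.

```python
import math

def transmittance(density, length=1.0):
    """Beer-Lambert transmittance through a homogeneous slab (assumed model)."""
    return math.exp(-density * length)

def opacity_from_mean_density(sub_densities, length=1.0):
    """Average the sub-voxel densities first, then compute one opacity."""
    mean_density = sum(sub_densities) / len(sub_densities)
    return 1.0 - transmittance(mean_density, length)

def opacity_from_mean_appearance(sub_densities, length=1.0):
    """Compute the opacity of each sub-column, then average the opacities."""
    opacities = [1.0 - transmittance(d, length) for d in sub_densities]
    return sum(opacities) / len(opacities)

# Hypothetical voxel: half of its footprint is empty, the other half is very dense.
sub_densities = [0.0, 0.0, 10.0, 10.0]

naive = opacity_from_mean_density(sub_densities)        # close to 0.99
filtered = opacity_from_mean_appearance(sub_densities)  # close to 0.50
print(naive, filtered)
```

Under these assumptions, the averaged density makes the voxel look almost fully opaque, whereas half of the footprint actually lets light through. The appearance is a non-linear function of the sub-voxel data, so the two operations do not commute.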

This subject aims at addressing the problem above by modeling a shadable representation that keeps enough of the meaningful subscale information. At the scale of a pixel, appearance consists of colors, opacity, polish and roughness (through highlights and small shadowed valleys). All of these can vary with view and light direction (as for the distant grid and the corrugated surface mentioned above). These global attributes may result from different values at subpixel scales, such as colored grains, rough geometry, or thin openwork. Similarly, they must be implicitly accounted for in the appearance of coarser pixels.
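As an illustration of view dependence, the following sketch estimates the apparent opacity of a distant grid made of parallel bars. It is a toy geometric model under stated assumptions: bars with a rectangular cross-section (`bar_width` in the grid plane, `bar_thickness` across it), regularly spaced with a given `period`, opaque, and viewed at an angle measured from the grid normal in the plane perpendicular to the bars. All names and values are hypothetical.

```python
import math

def grid_coverage(bar_width, bar_thickness, period, view_angle_deg):
    """Fraction of a pixel covered by the bars of a distant grid.

    Toy model: each bar projects a silhouette of width
    w*cos(theta) + t*sin(theta), while the bar spacing is foreshortened
    to period*cos(theta). Coverage saturates at 1 once bars overlap.
    """
    if not 0.0 <= view_angle_deg < 90.0:
        raise ValueError("view angle must be in [0, 90) degrees")
    theta = math.radians(view_angle_deg)
    silhouette = bar_width * math.cos(theta) + bar_thickness * math.sin(theta)
    projected_period = period * math.cos(theta)
    return min(1.0, silhouette / projected_period)

# Same sub-pixel geometry, three view directions.
for angle in (0.0, 45.0, 80.0):
    print(angle, grid_coverage(1.0, 1.0, 4.0, angle))
```

With these values the grid covers a quarter of the pixel when seen face-on, half of it at 45 degrees, and all of it at grazing angles. A single scalar opacity stored per pixel cannot reproduce this behavior.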

In order to be scalable through many scales, we need a closed representation, i.e., one whose average can be encoded using the representation itself. In particular, some notions like “normals” are not scalable, but normal distributions (e.g., lobes) are, and more generally histograms or their statistical moments. Notions like « density » or « opacity » lose too much key information: for 2 voxels aligned with the eye, the total opacity should not be the same whether their content is consistently gathered on the left of each voxel, or on the left for the first voxel and on the right for the second, or scattered within each. So, to voxelize a solid object with correct appearance on the silhouette (partly filled voxels), we need a better but compact representation of the occlusion in voxels. Another common case of correlated data is a sheet with 2 differently colored faces: seeing one means not seeing the other, so these colors, and also their lighting, should not be mixed, whatever the pixel coarseness.
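The two-voxel example can be checked numerically. The sketch below is an illustration only, assuming each voxel footprint is split into a few sub-columns that are either fully opaque (1) or empty (0); the masks and function names are hypothetical. Each voxel has the same scalar opacity of 0.5, yet the opacity of the pair depends on how the content is arranged.

```python
def combined_opacity(mask_front, mask_back):
    """Opacity of two voxels aligned with the eye, from binary occupancy masks.

    A sub-column is blocked as soon as either voxel blocks it.
    """
    blocked = [1 if (f or b) else 0 for f, b in zip(mask_front, mask_back)]
    return sum(blocked) / len(blocked)

def uncorrelated_opacity(alpha_front, alpha_back):
    """Usual compositing formula, which assumes statistically independent content."""
    return 1.0 - (1.0 - alpha_front) * (1.0 - alpha_back)

left = [1, 1, 0, 0]         # content gathered on the left half
right = [0, 0, 1, 1]        # content gathered on the right half
scattered_a = [1, 0, 1, 0]  # content scattered, independent of scattered_b
scattered_b = [1, 1, 0, 0]

print(combined_opacity(left, left))                # 0.5: same side in both voxels
print(combined_opacity(left, right))               # 1.0: complementary sides
print(combined_opacity(scattered_a, scattered_b))  # 0.75: independent layouts
print(uncorrelated_opacity(0.5, 0.5))              # 0.75: scalar formula
```

Only the scattered case matches the scalar compositing formula. The two correlated cases differ from it in opposite directions, which is why a per-voxel opacity alone cannot give correct silhouettes.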

Several of the issues above have been addressed in our previous work (see ref section) for surfaces. In this project we target volumes of voxels, in order to complete the scalability (with high quality) of the voxel representation. This will fully allow real-time, high-quality walk-throughs of very large and detailed scenes: let's break the complexity wall!

Math: skills in integration, Fourier analysis or statistics for images and textures would be a plus.

C/C++, OpenGL.

Notions of parallelism (multithreading) or GPU programming (GLSL, CUDA, etc.) would be a plus, but these can easily be learned during the project.

Filtering surface appearance: « Representing Appearance and Pre-filtering Subpixel Data in Sparse Voxel Octrees », HPG'2012

Multiscale voxels: « GigaVoxels: Ray-Guided Streaming for Efficient and Detailed Voxel Rendering », I3D'09

Multiscale lighting: « Interactive Indirect Illumination Using Voxel Cone Tracing », CGF'2011