TP3: Advanced shaders

The deadline for this project is: Saturday, December 20, 2013 (Midnight European Time)

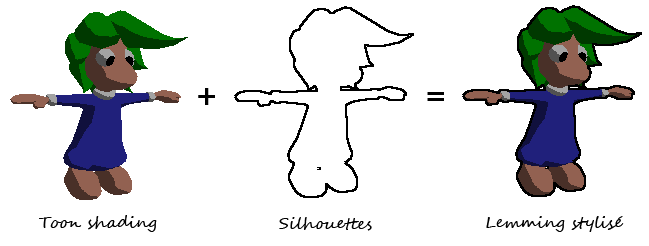

Silhouette detection

Our goal is to add silhouettes to the drawings. We will detect them through image analysis, then add the result to the toon shading from TP1. The key idea is to use an edge-detection filter, such as the Sobel operator:

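$$
H = \begin{pmatrix} -1 & -2 & -1 \\ 0 & 0 & 0 \\ 1 & 2 & 1 \end{pmatrix},
\qquad
V = \begin{pmatrix} 1 & 0 & -1 \\ 2 & 0 & -2 \\ 1 & 0 & -1 \end{pmatrix}
$$

$$
\text{result} = \sqrt{H^2 + V^2}
$$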

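Applying each filter (H or V) at a pixel means taking the values of the 3×3 neighborhood around it, multiplying each one by the corresponding coefficient, and summing the contributions. The result is the square root of the sum of the squares of the two filter responses, i.e. the magnitude of the gradient.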

This filter detects differences in intensity between neighboring pixels: the larger the difference between the value at this pixel and the values at its neighbors, the larger the filter response. Since we are interested in silhouettes, we actually care about differences in depth, not in color. We will therefore apply the filter to the depth buffer.

However, the filter cannot be applied in a single pass, because a fragment shader only has access to its own fragment and cannot read the values computed for neighboring fragments in the same pass. We need two separate passes. In the first pass, we compute the toon shading and the depth map, and render them into a Framebuffer Object (FBO). In the second pass, we render a single quad covering the entire window. For each fragment of this quad, we read the values computed in the first pass, using gl_FragCoord (the window-space position of the current pixel) to locate them.
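A small sketch of the address arithmetic in the second pass: by default gl_FragCoord.xy holds the pixel center, e.g. (0.5, 0.5) for the bottom-left pixel, so dividing by the window size gives the texture coordinate of the matching texel center, and adding integer offsets reaches the neighbors. The C code mirrors a GLSL expression such as `(gl_FragCoord.xy + vec2(dx, dy)) / windowSize`, where `windowSize` is a hypothetical uniform. With GLSL 1.30 or later, `texelFetch(tex, ivec2(gl_FragCoord.xy) + ivec2(dx, dy), 0)` avoids the division altogether.

```c
#include <assert.h>

/* Stand-in for GLSL's vec2. */
typedef struct { float x, y; } vec2;

/* Texture coordinate of the texel offset by (dx, dy) pixels from the
   fragment at frag_coord, for a texture covering the whole window
   (width x height pixels). frag_coord is a pixel center, so the
   result lands exactly on a texel center. */
vec2 neighbor_uv(vec2 frag_coord, float width, float height, int dx, int dy) {
    assert(width > 0.0f && height > 0.0f);
    vec2 uv = { (frag_coord.x + (float)dx) / width,
                (frag_coord.y + (float)dy) / height };
    return uv;
}
```

For a 4×4 window, the right-hand neighbor of the bottom-left pixel, whose gl_FragCoord is (0.5, 0.5), is read at (0.375, 0.125).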

Try changing the value of the threshold applied to the filter result (pixels whose value exceeds it are drawn as silhouette). What are the limitations of this approach?

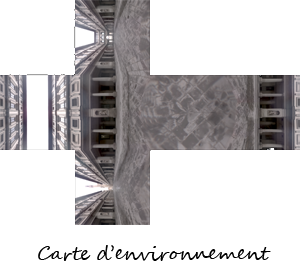

Environment mapping

Environment mapping simulates, in real time and using textures, how an object reflects (or refracts) its environment. You can think of the environment map as a sphere or a cube enclosing the entire scene. There are several ways to encode the environment: cube maps, sphere maps, paraboloid maps... Cube maps are the easiest to use with OpenGL, and many cube maps are available online (here and there, for example).
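To see how a cube map is addressed, the C sketch below reproduces the face-selection rule from the OpenGL specification that a samplerCube lookup applies in hardware: the largest-magnitude component of the direction picks the face, and the two other components, divided by it, give (s, t) on that face. This can help when checking that the six images are loaded on the right faces; the enum and function names are hypothetical.

```c
#include <assert.h>
#include <math.h>

/* Faces in the order of GL_TEXTURE_CUBE_MAP_POSITIVE_X + i. */
typedef enum { POS_X, NEG_X, POS_Y, NEG_Y, POS_Z, NEG_Z } CubeFace;

/* Face hit by direction (rx, ry, rz) and (s, t) in [0, 1] on that face,
   following the OpenGL face-selection table. The direction does not
   need to be normalized. Ties between axes are resolved arbitrarily. */
CubeFace cube_lookup(float rx, float ry, float rz, float *s, float *t) {
    float ax = fabsf(rx), ay = fabsf(ry), az = fabsf(rz);
    assert(ax > 0.0f || ay > 0.0f || az > 0.0f);
    CubeFace face;
    float sc, tc, ma;
    if (ax >= ay && ax >= az) {          /* major axis is x */
        face = rx > 0.0f ? POS_X : NEG_X;
        sc = rx > 0.0f ? -rz : rz;
        tc = -ry;
        ma = ax;
    } else if (ay >= az) {               /* major axis is y */
        face = ry > 0.0f ? POS_Y : NEG_Y;
        sc = rx;
        tc = ry > 0.0f ? rz : -rz;
        ma = ay;
    } else {                             /* major axis is z */
        face = rz > 0.0f ? POS_Z : NEG_Z;
        sc = rz > 0.0f ? rx : -rx;
        tc = -ry;
        ma = az;
    }
    *s = 0.5f * (sc / ma + 1.0f);
    *t = 0.5f * (tc / ma + 1.0f);
    return face;
}
```

For example, the direction (1, 0, 0) hits the center of the +X face, and (0, 0, -1) the center of the -Z face.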

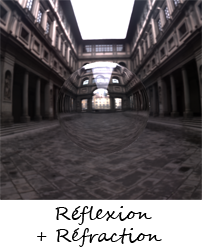

In the fragment shader, declare the environment map as a samplerCube and read the color in a given direction with texture() (textureCube() in older GLSL versions). Use reflect to get the reflected direction from the viewpoint, and refract to get the refracted direction from the viewpoint (if your object is made of glass).

You should combine the reflected and refracted colors using the Fresnel coefficients, preferably computed with Schlick's approximation:

$$
F = f + (1 - f)\,(1 - V \cdot N)^5
\qquad \text{with} \qquad
f = \frac{(1 - n_1 / n_2)^2}{(1 + n_1 / n_2)^2}
$$

If the environment appears inverted after refraction, this is expected: we only consider the first interface (where the ray enters the object). You can look online for ways to approximate the second interface and get the correct look. If you see a dark circle near the edges of the sphere, you have swapped the two refractive indices.

Shadows

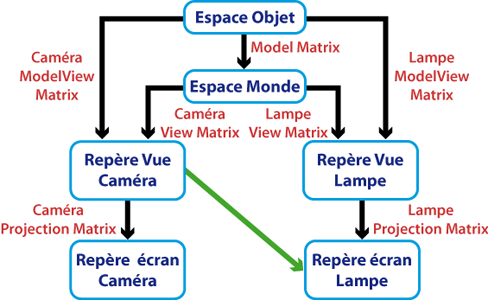

We are going to implement shadow mapping, as seen in the lecture. We need two rendering passes:

- in the first, we render from the light source and store the depth map in an FBO (as for silhouette extraction).

- in the second, we render from the camera, and for each pixel, we compare its distance to the light source with the distance stored in the depth map.

The main difficulty is making sure you are in the right coordinate system when accessing the textures. If the shadows on the ground are incorrect, you most likely forgot to take the model transform matrix into account.
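The chain of transforms for the shadow-map lookup can be sketched in C: the point goes from object space to world space (model matrix), then through the light's view and projection matrices, and after the perspective divide its coordinates are remapped from [-1, 1] to [0, 1], giving the texture coordinate in the shadow map (x, y) and the depth to compare (z, assuming the default depth range of [0, 1]). The matrix types are hypothetical stand-ins, stored row-major here for readability, unlike the column-major layout OpenGL expects when uploading uniforms.

```c
#include <assert.h>

/* Stand-ins for GLSL's mat4/vec4; m[row][col], row-major. */
typedef struct { float m[4][4]; } mat4;
typedef struct { float x, y, z, w; } vec4;

mat4 identity4(void) {
    mat4 r = {{{0}}};
    for (int i = 0; i < 4; ++i) r.m[i][i] = 1.0f;
    return r;
}

/* Matrix-vector product a * v. */
static vec4 mul_mv(const mat4 *a, vec4 v) {
    float in[4] = { v.x, v.y, v.z, v.w };
    float out[4];
    for (int row = 0; row < 4; ++row) {
        out[row] = 0.0f;
        for (int col = 0; col < 4; ++col) out[row] += a->m[row][col] * in[col];
    }
    vec4 res = { out[0], out[1], out[2], out[3] };
    return res;
}

/* Shadow-map coordinates of a point given in object space:
   object -> world (model) -> light view -> light clip (projection),
   then perspective divide and remap from [-1, 1] to [0, 1]. */
vec4 shadow_coord(const mat4 *light_proj, const mat4 *light_view,
                  const mat4 *model, vec4 p_object) {
    vec4 p = mul_mv(model, p_object);   /* skipping this misplaces shadows */
    p = mul_mv(light_view, p);
    p = mul_mv(light_proj, p);
    assert(p.w != 0.0f);
    vec4 s = { 0.5f * (p.x / p.w) + 0.5f,
               0.5f * (p.y / p.w) + 0.5f,
               0.5f * (p.z / p.w) + 0.5f,
               1.0f };
    return s;
}
```

With identity light matrices and a model matrix translating by 0.5 along x, the object-space origin lands at (0.75, 0.5) in the shadow map; dropping the model matrix would wrongly sample (0.5, 0.5).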

Do not test shadow maps with the light direction set to viewpoint, East, South or West: in these cases, the light direction is parallel to the ground, and the shadows are unpredictable.

For debugging, start with a direct comparison between depths (with no bias). You should see moiré patterns instead of illuminated areas, but the shadow contours will be precise. When you are ready to add a bias to the depth test, try either adding a bias to the depth or multiplying the depth by a constant. See how small you can make the bias.
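The three variants of the depth test can be compared with a small C function. The moiré appears because a lit surface is compared against its own stored depth, and limited depth precision makes the test pass or fail almost at random; both biases push the comparison slightly in favor of "lit". The enum, the function name, and which side the bias is applied to (here the stored depth) are assumptions of this sketch.

```c
#include <assert.h>

/* Hypothetical switch between the biasing strategies. */
typedef enum { BIAS_NONE, BIAS_ADD, BIAS_MUL } BiasMode;

/* Returns 1 if the fragment is in shadow, 0 if it is lit.
   frag_depth:   depth of the fragment as seen from the light, in [0, 1].
   stored_depth: value read from the shadow map at the same location.
   BIAS_ADD compares against stored_depth + bias;
   BIAS_MUL compares against stored_depth * bias (bias slightly above 1). */
int in_shadow(float frag_depth, float stored_depth, BiasMode mode, float bias) {
    assert(bias >= 0.0f);
    float ref = stored_depth;
    switch (mode) {
    case BIAS_ADD: ref = stored_depth + bias; break;
    case BIAS_MUL: ref = stored_depth * bias; break;
    case BIAS_NONE: break;
    }
    return frag_depth > ref;
}
```

A fragment at depth 0.5001 whose stored depth is 0.5 (the same surface, up to precision) is wrongly shadowed with no bias, but lit with an additive bias of 0.001 or a factor of 1.01, while a fragment at depth 0.7 behind the occluder stays in shadow in all three modes.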

Make sure the lighting is consistent with the shadows: inside the shadow, only ambient lighting; outside the shadow, full lighting as computed in TP1.